One of the best things about AWS Lambda is the variety of ways you can create a serverless function. You can dive right into the console, or use tools like Chalice or the Serverless Framework, to name a few. For me, the latest option, container image support announced at re:Invent 2020, is the most efficient way to test a serverless function locally and to deal with large or awkward dependencies in your code. As you’d expect, this works perfectly with AWS SAM. This post gives you a quickstart on building a container-based Lambda function in Python.

In my case, I was building a function that would read data from Firestore in Google Cloud, run a data transformation and store the result in S3. I used to swear by Chalice, so I built my application with it, but found that Chalice couldn’t bundle the gRPC dependency; I would have had to build it myself. While the fix is simply to build the dependency and package it in the vendor directory of my Chalice application, I used the opportunity to finally try out container support.

Prerequisites

If you’re reading this, you’re probably using Python already. As well as Python, you’ll need Docker installed, plus the AWS CLI for pushing your image to Amazon ECR.

Building a Docker container with dependencies

As always, the AWS documentation will guide you through the basics.

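The AWS-provided base images for Python already include the Python runtime interface client, so there’s nothing extra to install for the setup below. You only need to add it yourself (pip install awslambdaric) if you build from a non-AWS base image.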

Next, create a Dockerfile that references the base image you are using. In my case, that was Python 3.8:

FROM public.ecr.aws/lambda/python:3.8

Presuming your code is in app.py with a function named handler as the entry point, add these two lines too:

COPY app.py ./

CMD ["app.handler"]
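For reference, a minimal app.py might look like the sketch below. CMD ["app.handler"] tells the runtime to import the module app and call its handler function with the event and context objects. The body is a hypothetical placeholder that echoes the param1 field from the test event used later in this post; it is not my Firestore-to-S3 function.

```python
import json


def handler(event, context):
    """Entry point referenced by CMD ["app.handler"] in the Dockerfile.

    Hypothetical placeholder logic: echo back the "param1" field of the
    incoming event. Your real work (e.g. reading Firestore, writing to S3)
    would go here.
    """
    param1 = event.get("param1")
    return {"statusCode": 200, "body": json.dumps({"received": param1})}
```

Whatever the handler returns is serialized to JSON and sent back to the caller.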

Dealing with dependencies is pretty straightforward. As with any Python application, all the modules you use should be listed in requirements.txt, like this:

google-cloud-firestore

google-auth

To make sure all dependencies are bundled into the image, just add these two lines to your Dockerfile:

COPY requirements.txt ./

RUN pip install -r requirements.txt

It’s as simple as that.
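If you want to confirm that everything in requirements.txt actually made it into the image, a small check like the sketch below can help. It is my own assumption rather than anything the AWS tooling requires: it lists the import names for the packages (google-cloud-firestore imports as google.cloud.firestore, google-auth as google.auth) and reports the ones Python can’t find.

```python
import importlib.util

# Import names for the packages in requirements.txt. pip package names and
# import names differ, so these have to be kept in sync by hand.
REQUIRED_MODULES = ["google.cloud.firestore", "google.auth"]


def missing_modules(module_names):
    """Return the subset of module_names that cannot be found on sys.path."""
    missing = []
    for name in module_names:
        try:
            found = importlib.util.find_spec(name) is not None
        except ModuleNotFoundError:
            # find_spec imports the parent packages of a dotted name and
            # raises if a parent (e.g. "google") is itself missing.
            found = False
        if not found:
            missing.append(name)
    return missing
```

Assuming you copy it into the image as check_deps.py, you could run it by overriding the image’s entrypoint with docker run’s --entrypoint flag and calling missing_modules(REQUIRED_MODULES); an empty list means every module was found.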

Building & Testing Locally

The huge benefit to working with containers is the ability to completely test your Lambda function locally before deploying to your AWS account. Let’s take a look into how to do that:

Build Your Container

The standard docker build syntax applies here.

docker build -t my-container-image .

The command above builds an image named my-container-image (tagged latest by default) from the Dockerfile in the current directory; the trailing . is the build context.

Running Locally

The AWS base image includes the Lambda Runtime Interface Emulator, so typically you would run your container as follows:

docker run -p 9000:8080 my-container-image:latest

However, it’s very likely that your function interacts with AWS services (in my case, S3), so you will need to make sure credentials are available to it inside the container; the AWS documentation on testing container images locally covers this. These credentials can be set using the following environment variables:

AWS_ACCESS_KEY_ID
AWS_SECRET_ACCESS_KEY
AWS_SESSION_TOKEN
AWS_REGION

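Provided you’ve got the credentials set on a user (which should use the same policy as your Lambda function would), export them in your shell and pass them through when running locally. With -e NAME and no value, Docker copies each variable’s value from your shell:

docker run -p 9000:8080 -e AWS_ACCESS_KEY_ID -e AWS_SECRET_ACCESS_KEY -e AWS_SESSION_TOKEN -e AWS_REGION my-container-image:latest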
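If you’d rather script this step, the sketch below builds the docker run command in Python and forwards only those credential variables listed above that are actually set in your environment. Using -e NAME without a value makes Docker read the value from its own environment, so the secrets never appear in the command itself. The image name matches the one built earlier; the env parameter exists only to make the function easy to test.

```python
import os
import subprocess

# Credential variables the function needs to reach AWS services such as S3.
CREDENTIAL_VARS = [
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_SESSION_TOKEN",
    "AWS_REGION",
]


def docker_run_args(image="my-container-image:latest", env=None):
    """Build the `docker run` argument list for local testing.

    Each credential variable present in `env` (default: os.environ) is
    forwarded with `-e NAME`; Docker reads the value from the environment
    it inherits from this process, so secrets are not placed in argv.
    """
    env = os.environ if env is None else env
    args = ["docker", "run", "-p", "9000:8080"]
    for name in CREDENTIAL_VARS:
        if name in env:
            args += ["-e", name]
    args.append(image)
    return args


def run_locally(image="my-container-image:latest"):
    """Start the container in the foreground; blocks until it exits."""
    subprocess.run(docker_run_args(image), check=True)
```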

Finally, while the container is running, you can invoke your function by posting an event payload to it:

curl -XPOST "http://localhost:9000/2015-03-31/functions/function/invocations" -d '{"param1":"parameter 1 value"}'
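The same invocation can be made from Python with only the standard library, which is handy if you want to drive local tests from a script. This is a sketch assuming the container is running with port 9000 mapped as shown earlier; it posts the event as JSON to the same URL the curl command uses and decodes the function’s JSON response.

```python
import json
import urllib.request

# Invocation path exposed by the Runtime Interface Emulator (same as curl).
INVOKE_PATH = "/2015-03-31/functions/function/invocations"


def build_invoke_request(payload, host="localhost", port=9000):
    """Build a POST request carrying `payload` as the JSON event."""
    url = f"http://{host}:{port}{INVOKE_PATH}"
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def invoke_local(payload, **kwargs):
    """Send the event to the running container and decode the JSON reply."""
    with urllib.request.urlopen(build_invoke_request(payload, **kwargs)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, invoke_local({"param1": "parameter 1 value"}) sends the same event as the curl command.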

Note that you can also pass the AWS_LAMBDA_FUNCTION_TIMEOUT environment variable (for example with -e on docker run) to set a timeout for your function when running locally; the emulator reads this value in seconds.

Running your Lambda

Now that you have a working container, you need to deploy it to your AWS account.

Deploy Your Container

Authenticate the Docker CLI to your Amazon ECR registry:

aws ecr get-login-password --region <REGION> | docker login --username AWS --password-stdin <AWS ACCOUNT ID>.dkr.ecr.<REGION>.amazonaws.com

The first time you go through this process, you will need to create an ECR repository with the name you want to use:

aws ecr create-repository --repository-name my-container-image

After this, you just need to tag your image and push it to ECR:

docker tag my-container-image:latest <AWS ACCOUNT ID>.dkr.ecr.<REGION>.amazonaws.com/my-container-image:latest

docker push <AWS ACCOUNT ID>.dkr.ecr.<REGION>.amazonaws.com/my-container-image:latest
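If you push images regularly, the tag-and-push step is easy to script. A minimal sketch, assuming the Docker CLI is already logged in to ECR as shown above; the account ID and region arguments are whatever you substituted for the placeholders.

```python
import subprocess


def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Return the ECR image URI in the form used by docker tag/push above."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def tag_and_push(local_image, account_id, region, repository, tag="latest"):
    """Tag a local image for ECR and push it, returning the remote URI."""
    remote = ecr_image_uri(account_id, region, repository, tag)
    subprocess.run(["docker", "tag", local_image, remote], check=True)
    subprocess.run(["docker", "push", remote], check=True)
    return remote
```

For example, tag_and_push("my-container-image:latest", "123456789012", "us-east-1", "my-container-image"), where 123456789012 is a placeholder account ID.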

Your container is now ready to be used in a Lambda function.

Creating Your Lambda Function

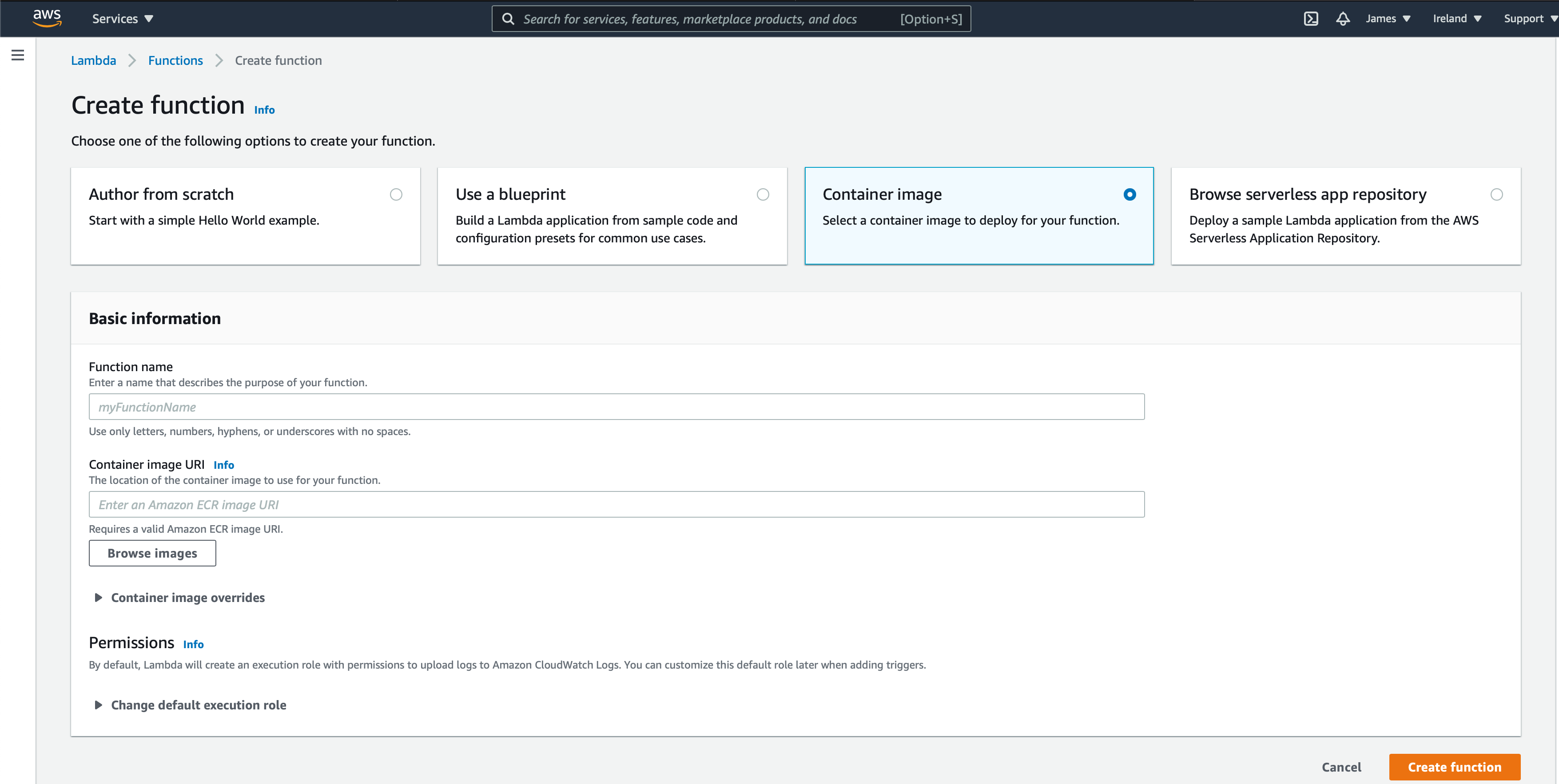

Just open the AWS console, go to AWS Lambda and click Create function.

From there you can create a function from a container image and select the image you need from ECR through Browse images.

Having taken this console-based approach, you will at minimum need to attach an execution role to your Lambda function that grants the permissions it uses (in my case, S3). If you want a more disciplined, less console-dependent way of creating container-based Lambda functions, check out [Eric Johnson’s](https://twitter.com/edjgeek) post on Using Container Image Support for AWS Lambda with AWS SAM.
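For a less console-dependent route short of adopting SAM, the same function can be created with boto3’s Lambda client. This is a sketch, not the approach from Eric’s post: it assumes boto3 is installed, your credentials and default region are configured, and an execution role already exists. The function name, role ARN, timeout and memory values are hypothetical. PackageType="Image" tells Lambda the code is a container image referenced by its ECR URI, so no Handler or Runtime is given; those come from the image.

```python
def create_function_kwargs(function_name, image_uri, role_arn,
                           timeout=30, memory_size=512):
    """Arguments for Lambda's CreateFunction call for a container image.

    timeout (seconds) and memory_size (MB) are hypothetical defaults;
    tune them for your workload.
    """
    return {
        "FunctionName": function_name,
        "PackageType": "Image",
        "Code": {"ImageUri": image_uri},
        "Role": role_arn,
        "Timeout": timeout,
        "MemorySize": memory_size,
    }


def create_function(*args, **kwargs):
    """Create the function using the default boto3 session and region."""
    # Imported here so create_function_kwargs works without boto3 installed.
    import boto3

    client = boto3.client("lambda")
    return client.create_function(**create_function_kwargs(*args, **kwargs))
```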

Extra Resources

As well as the documentation resources linked in this article, make sure to check out Chris Munns’ excellent talk on Container Image Support in AWS Lambda from re:Invent 2020.